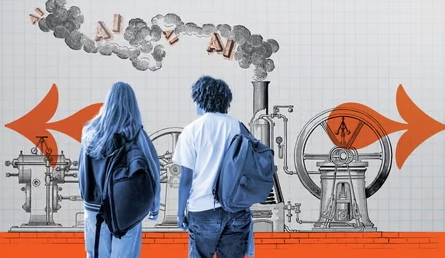

For many students, ChatGPT has become as common as a notebook or calculator. Whether it is fixing grammar, organising revision notes, or generating flashcards, the AI tool is quickly turning into a trusted companion in university life.

But campuses are still drawing a line. Using ChatGPT to understand material? Fine. Using it to write your assignments? Not allowed.

A recent report from the Higher Education Policy Institute found that 92 percent of students now use some form of generative AI, up from 66 percent last year.

Megan Chen, a master’s student in technology policy at Cambridge, says AI is everywhere. On TikTok, she shares her favourite AI study hacks, from chat-based study sessions to smart note-sorting tips.

“At first, people thought ChatGPT was just cheating and that it would hurt our critical thinking,” she said. “Now, it feels like a study partner, something you can talk to and learn from.” Some students even call it simply “Chat.”

Used wisely, ChatGPT can be a powerful self-study tool. Chen recommends asking it to create practice exam questions or tidy up lecture notes. “You can have a dialogue with it like you would with a professor,” she said, adding that it can also generate diagrams and summaries of tough topics.

OpenAI’s head of international education, Gina Dewani, agrees. “You can upload course slides and ask multiple-choice questions,” she explained. “It helps break down complex tasks and clarify concepts.”

Still, experts warn against over-reliance. Chen and her peers use what they call the “push-back method.” When ChatGPT gives an answer, they challenge it by asking how someone else might see the problem differently. “It should be one perspective, not the only voice,” Chen said.

Universities are responding with mixed approaches. Many welcome responsible use, but educators remain concerned about misuse. Graham Wynn, pro vice-chancellor of education at Northumbria University, says students must not rely on AI for knowledge or content. “They can quickly get into trouble with fake references and invented material,” he said.

Like many campuses, Northumbria uses AI detectors to flag suspicious submissions. At University of the Arts London, students must log their AI use to show how it fits into their individual creative process.

The technology is evolving fast. The same tools students are experimenting with now are already common in the workplaces they will soon enter. But universities stress that learning is not only about results; it is also about the process.

“AI literacy is now a core skill for students,” said a UAL spokesperson. “Approach it with curiosity and awareness.”